There are two things developers hate: testing and documentation. I know, it doesn’t matter how important they are, they are just boring.

But I look at them as an investment: 10 minutes of testing saves an hour of debugging, and the only thing worse than debugging is debugging in production.

There are 2 main types of testing:

- Unit

- End to End

They both add value, but it’s key to do them both right, or else you end up with duplication and waste.

1. Unit

Unit testing is a modular approach that focuses on one part of the solution. The tests should be completed by the developer during the build. They often start off small and grow with the solution, but they should only grow to a point. The key thing is to identify the limits of the unit test: too small and it takes too much time to test, too big and it takes too long to debug. You need to find the sweet spot.

How you design and build your flow has the biggest impact on your testing. If you build one huge monolithic flow then it is going to be hard. The best approach is to cap your flows at fewer than 50 actions and use child flows with predefined inputs/outputs. That way the unit test is simple: does the input get the expected output? As the code is ring-fenced from the other flows, if it fails or needs an update, we only need to test that one flow.
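To make the “does the input get the expected output” idea concrete, here is a rough sketch in Python. Python is only an analogy for a child flow here, and every name in it (`ChildFlowInput`, `ChildFlowOutput`, `select_powerpoint_files`) is made up for illustration rather than taken from Power Automate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChildFlowInput:
    """Hypothetical predefined input of a small child flow."""
    file_names: tuple[str, ...]


@dataclass(frozen=True)
class ChildFlowOutput:
    """Hypothetical predefined output of the same child flow."""
    powerpoint_files: tuple[str, ...]


def select_powerpoint_files(flow_input: ChildFlowInput) -> ChildFlowOutput:
    """Stand-in for one ring-fenced child flow: keep only PowerPoint file names."""
    selected = tuple(
        name for name in flow_input.file_names
        if name.lower().endswith((".pptx", ".ppt"))
    )
    return ChildFlowOutput(powerpoint_files=selected)


def test_select_powerpoint_files() -> None:
    """The whole unit test: a known input must give the expected output."""
    result = select_powerpoint_files(
        ChildFlowInput(file_names=("deck.pptx", "budget.xlsx", "OLD.PPT"))
    )
    assert result == ChildFlowOutput(powerpoint_files=("deck.pptx", "OLD.PPT"))


test_select_powerpoint_files()
```

Because the input and output shapes are fixed, a change inside the child flow only needs this one test to be rerun.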

During the build we have a couple of tricks/tools we can use to help with our unit tests.

Test Flow

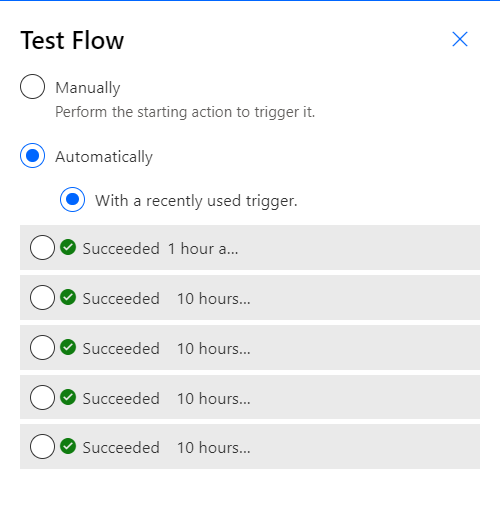

An obvious one, but I have met far too many people who don’t know about it. You have 2 options, manual and automatic. If it’s not a manually triggered flow then the manual test just increases the trigger polling frequency.

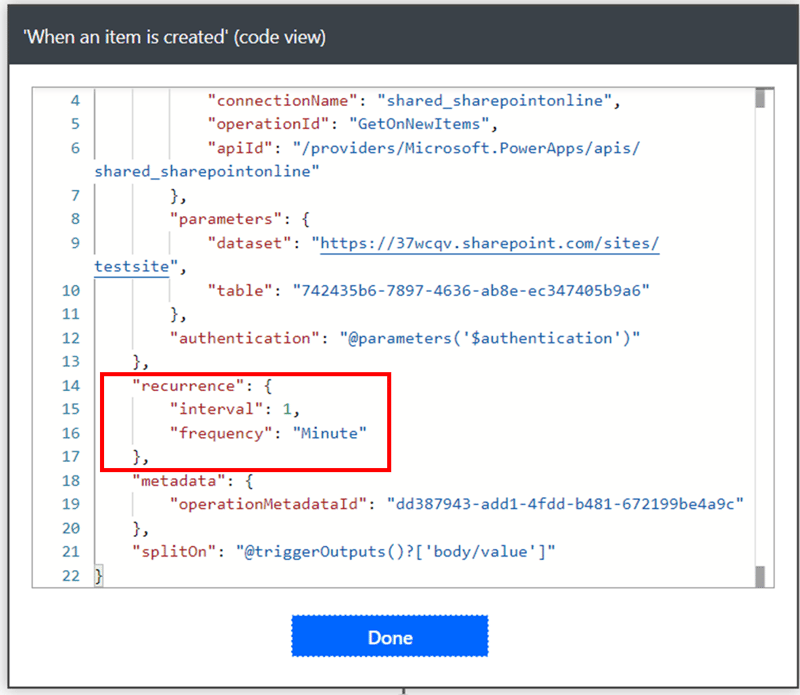

In a nutshell, an automatic trigger is actually a scheduled API call that continually asks “should I run yet?”. So On Email will keep asking the Outlook API, “do you have any emails yet?” The schedule can be seen if you peek at the code of the trigger. It depends on your license and connector, and it is a worst case (if Microsoft has the capacity it will check more often). Running a test changes the schedule to every second.
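The polling idea can be sketched in a few lines of Python. This is not how Microsoft implements triggers, just a minimal illustration of “ask on a schedule until something turns up”. The function name `poll_trigger`, its parameters and the fake mailbox are all assumptions for the example.

```python
import time
from typing import Callable, Optional


def poll_trigger(
    check_for_items: Callable[[], list],
    interval_seconds: float,
    max_polls: int,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[list]:
    """Ask "should I run yet?" on a schedule and return the items that start a run."""
    for attempt in range(max_polls):
        items = check_for_items()
        if items:
            return items  # something arrived, so the flow would start now
        if attempt < max_polls - 1:
            sleep(interval_seconds)  # wait until the next scheduled check
    return None  # nothing arrived within the polling window


# Fake mailbox (hypothetical): empty on the first two checks, one email on the third.
responses = iter([[], [], ["email-1"]])
waits: list[float] = []  # records the waits instead of really sleeping

# A short interval here mimics a test run polling every second.
fired = poll_trigger(
    lambda: next(responses),
    interval_seconds=1,
    max_polls=5,
    sleep=waits.append,
)
```

A longer `interval_seconds` plays the role of the normal schedule, while a test run is the same loop with the interval turned right down.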

The automatic test reuses a previous run, which is much better than continually sending emails to test a New Email triggered flow.

The problem with this is that the flow will impact things outside of the flow (e.g. delete an email), which may then cause the next test to fail (as you can’t delete it twice), and it always runs to the end, even if that part isn’t finished. Fortunately we have 2 ways to mitigate these.

Static Result

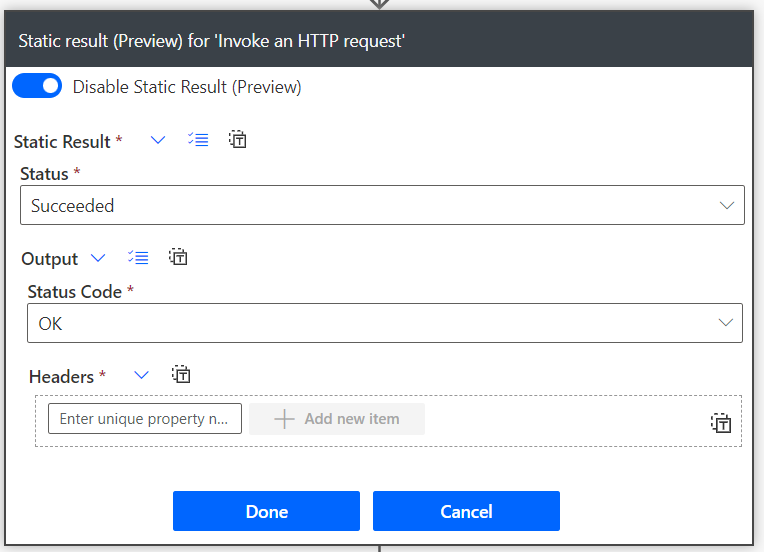

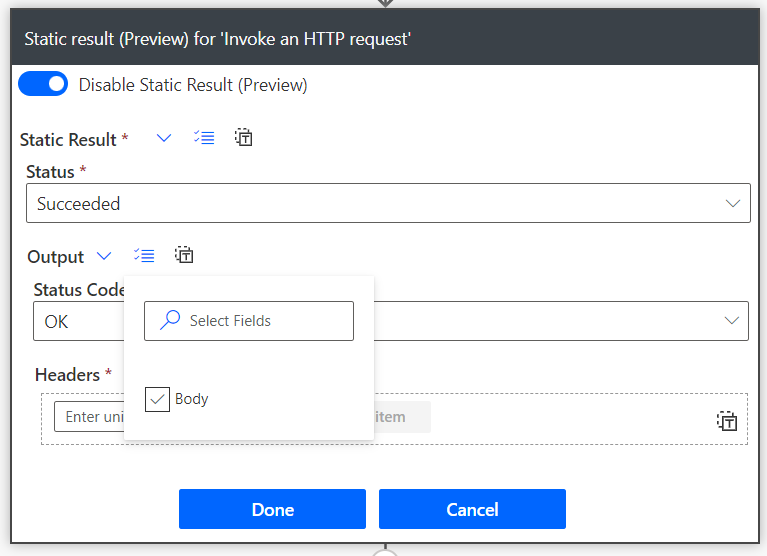

Warning: this is still in preview (so subject to change, but as it’s a dev tool I have fewer worries about using it). Static results mean you can tell an action to return a value without actually leaving the flow.

So in my delete email example, it will say it has deleted the email and continue, but not actually delete it. It’s also perfect for exception handling, as you can force the action to fail even if the inputs are valid.

You can return a set body too, just copy the output from a previous run history and add it in.

Setting static results has 2 other big benefits:

- Speed – it is quicker than waiting for an API response

- Consistency – you remove a variable from the test (no more scratching your head over why it’s suddenly broken, only to find an external update to the API/data)

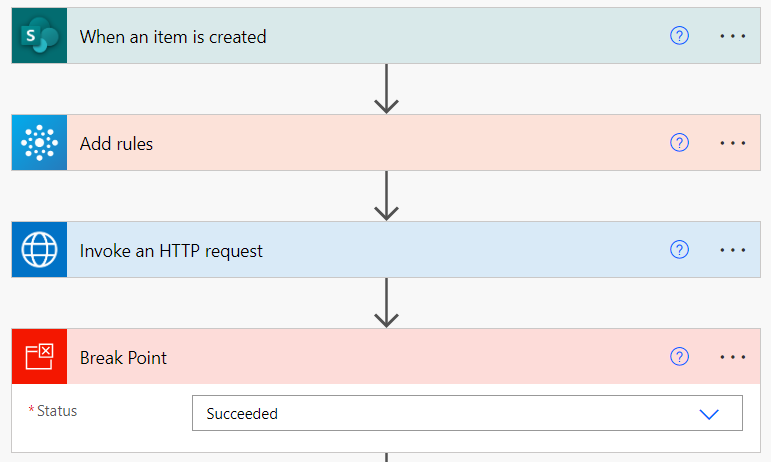
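If you come from a coding background, static results are close to what you would call a stub or mock. The sketch below shows the same behaviour in Python under that assumption. The `Action` class and the shape of the `static_result` dictionary are invented for the example and are not the Power Automate format.

```python
from typing import Callable, Optional


class Action:
    """Hypothetical flow action that can be given a static result."""

    def __init__(self, name: str, call: Callable[[dict], dict]) -> None:
        self.name = name
        self._call = call
        self.static_result: Optional[dict] = None  # None means "call the real API"

    def run(self, inputs: dict) -> dict:
        if self.static_result is not None:
            # Static result set: never leave the flow, just report the canned outcome.
            if self.static_result["status"] == "Failed":
                raise RuntimeError(f"{self.name} failed (static result)")
            return self.static_result["body"]
        return self._call(inputs)


deleted: list[str] = []  # stands in for the real mailbox being changed


def real_delete(inputs: dict) -> dict:
    """Pretend API call with a side effect outside the flow."""
    deleted.append(inputs["message_id"])
    return {"deleted": True}


delete_email = Action("Delete email", real_delete)

# Happy path: the action reports success but nothing is actually deleted.
delete_email.static_result = {"status": "Succeeded", "body": {"deleted": True}}
happy = delete_email.run({"message_id": "msg-1"})

# Exception path: force a failure even though the inputs are valid.
delete_email.static_result = {"status": "Failed", "body": {}}
try:
    delete_email.run({"message_id": "msg-1"})
    forced_failure = False
except RuntimeError:
    forced_failure = True
```

The same test can be rerun as often as needed because the mailbox is never touched.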

Terminate Action

The terminate action ends the flow at that point, so we can drop it in wherever we want the flow to stop running. I name mine ‘Break Point’ so that I can do a quick search to make sure I don’t miss any when I finish development.

Hopefully a future update will add a break point setting, like Static Results, so that we can just click a setting on an action instead of having to add an action.

2. End to End

End to End checks the entire process (I know the name kind of gives it away). The key things for this testing are:

- Testing Plan

- Segregation of Duties

Testing Plan

You should never do End to End testing without a testing plan. A testing plan ensures that everything is tested and the expected result is always achieved.

It needs to cover:

- All expected inputs

- All possible inputs (grouped and within reason)

- Happy path results

- Exception path results

The happy path is what we want to happen; the exception path is what we want to happen when something goes wrong.

The test plan should be broken up into test scenarios that cover everything.

As an example, I have a flow that does “when an email arrives, if the attachment is a PowerPoint file then save it to a folder on my OneDrive”. My test plan would be:

| Scenario | Expected Behaviour |
|---|---|
| Email with PowerPoint file | File saved to OneDrive folder |
| Email with Excel file | File not saved to OneDrive folder |
| Email with no attachment | File not saved to OneDrive folder |
| Email with multiple PowerPoint files | All files saved to OneDrive folder |
| Email with multiple PowerPoint and Excel files | Only the PowerPoint files saved to OneDrive folder |
| Email with PowerPoint file already saved | Old file replaced with new file in OneDrive folder |
| Email with PowerPoint file but OneDrive folder deleted | Mailbox owner notified by Teams, email moved to exception mailbox folder |
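A test plan like this maps nicely onto a table-driven test. As a loose sketch, here it is in Python. The `handle_email` function is a made-up stand-in for the flow's decision logic and the file names are invented. The “already saved” scenario is left out because it would need a real or simulated folder.

```python
def handle_email(attachments: list[str], folder_exists: bool = True) -> dict:
    """Hypothetical stand-in for the flow: decide what happens to one email."""
    powerpoint = [a for a in attachments if a.lower().endswith((".pptx", ".ppt"))]
    if powerpoint and not folder_exists:
        # Exception path: nothing saved, owner notified and email moved.
        return {"saved": [], "exception": True}
    return {"saved": powerpoint, "exception": False}


# Each row: scenario, attachments, folder exists, expected saved files, expected exception.
TEST_PLAN = [
    ("Email with PowerPoint file", ["deck.pptx"], True, ["deck.pptx"], False),
    ("Email with Excel file", ["budget.xlsx"], True, [], False),
    ("Email with no attachment", [], True, [], False),
    ("Email with multiple PowerPoint files", ["a.pptx", "b.pptx"], True, ["a.pptx", "b.pptx"], False),
    ("Email with multiple PowerPoint and Excel files", ["a.pptx", "c.xlsx", "b.pptx"], True, ["a.pptx", "b.pptx"], False),
    ("Email with PowerPoint file but OneDrive folder deleted", ["deck.pptx"], False, [], True),
]


def run_test_plan() -> list[str]:
    """Run every scenario and return the names of the ones that failed."""
    failures = []
    for scenario, attachments, folder_exists, expected_saved, expected_exception in TEST_PLAN:
        result = handle_email(attachments, folder_exists)
        if result != {"saved": expected_saved, "exception": expected_exception}:
            failures.append(scenario)
    return failures


failures = run_test_plan()
```

Keeping the scenarios as data means a new row in the plan is a new test, with no extra test logic to write.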

Segregation of Duties

The End to End tester should not be the developer; there has to be a clear separation of duties to maintain the integrity of the test. Ideally the tester will be from the business (that’s why it is also known as User Acceptance Testing), but at the very least it should be someone outside the developer’s team and line management. This may seem over the top but:

- If you have made it and tested it for hours, human nature dictates you will either cut corners or view all results in the most positive light

- If the person testing is impacted or influenced by the deployment deadline, they are more likely to view all results in the most positive light

No matter how much of a pain it can be, testing has so much value, and in the long run it is always worth it.